Across the American government’s political spectrum, some see AI as an existential risk for humanity equivalent to nuclear weapons. Others see it as a technological revolution that can keep the US ahead of China and other competitors. Still others focus on the practical problems of dealing with chatbots and deepfakes. The White House has provided a way forward.

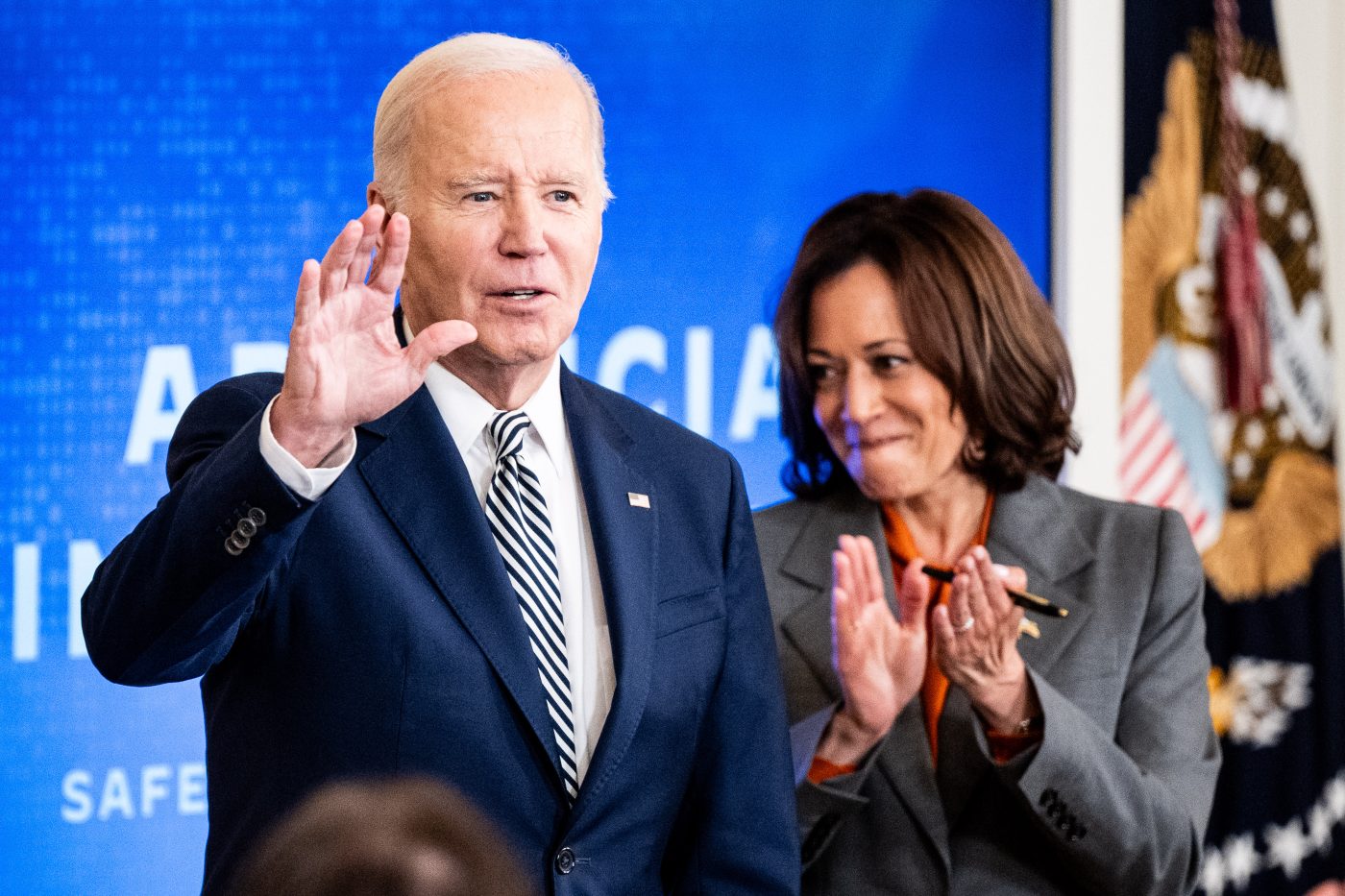

President Joseph Biden’s 111-page Executive Order on Safe, Secure, and Trustworthy Artificial Intelligence attempts to satisfy all these diverse concerns, though some critics claim it risks satisfying none. An executive order is binding only on the Federal Government’s Executive Branch. Unlike Europe’s soon-to-be-completed EU AI Act, it is not legislation that can be applied to all public and private bodies.

Even so, the US has done more to regulate AI than most appreciate. For several years, the US has been advancing its own AI-related laws and broad governance efforts. This new executive order represents not so much an advancement in US AI law as a continuation of existing government initiatives.

For alarmists, it includes sweeping controls on AI development. Companies are required to share safety test results for systems that could be particularly risky, in accordance with the Defense Production Act. AI engineering of dangerous biological materials is prohibited. The Federal Trade Commission is directed to tighten its watch on behavior that may endanger consumer rights.

For pragmatists, the White House order includes a warning to the healthcare industry to make sure its products are safe. It addresses the danger of racial, religious, and gender discrimination by issuing guidance against AI-generated bias in the housing market, a concern voiced by civil society organizations. The Council of Economic Advisers is empowered to protect workers’ rights by taking a close look at the effects of AI in the labor market.

For national security hawks, the Biden White House order aims to foster US strategic capabilities. It requires cloud service providers to report foreign customers to the federal government, limiting the ability of foreign countries to train AI models. These moves follow the Biden Administration’s recent strengthening of export controls on the most advanced US AI semiconductors to China.

For AI optimists, the new order aims to strengthen US tech leadership. It provides a path to streamline visa applications for AI experts and to create data privacy regulation, along with incentives to improve skills development for both future and current workers.

Despite the effort by the White House to appease all points of view, opposition is emerging. For regulation skeptics, it’s a textbook example of government rules run amok. For pro-regulation voices, it does not erase the need for Congressional legislation. At the announcement of the AI Executive Order, President Biden himself acknowledged that “we still need Congress to act.”

But congressional action looks unlikely. Although Senate Majority Leader Chuck Schumer has hosted a historic gathering of tech industry and civil society leaders and expressed a desire for legislation, political divisions in Congress and the upcoming 2024 elections are blocking progress.

President Biden’s initiative comes at a crucial time in global efforts to address AI. Ever since ChatGPT burst onto the scene, AI has jumped to the top of digital policy agendas. A swirl of activity is taking place. The UK is holding a much-anticipated AI Safety Summit; the UN has announced plans for a new advisory body; the G7 recently agreed on an AI Code of Conduct for companies; China is moving to censor AI-powered chatbots; the OECD is working to create common definitions; and the EU is close to finalizing its binding AI Act.

The new executive order attempts to assert US leadership. It’s a start. Moving forward, Washington will have to work with partners and foes to find a global regulatory regime that balances safety and innovation.

Camilo Torres Casanova is an Intern at CEPA’s Digital Innovation Initiative.

Eduardo Castellet Nogues is a Program Assistant at CEPA’s Digital Innovation Initiative.

Bandwidth is CEPA’s online journal dedicated to advancing transatlantic cooperation on tech policy. All opinions are those of the author and do not necessarily represent the position or views of the institutions they represent or the Center for European Policy Analysis.